Parsedmarc on Kubernetes with Opensearch

What's in This Article

DMARC records help you police the rules you put in place via SPF and DKIM records. DMARC tells receiving mail systems what to do with messages that fail those checks and where to send reports.
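To make that concrete: a DMARC policy is a DNS TXT record published at `_dmarc.<your domain>`, made of semicolon-separated `tag=value` pairs. The sketch below splits such a record into its tags. The record is a made-up example for example.com, and the parser is a simplified illustration, not a full RFC 7489 validator.

```python
from typing import Dict


def parse_dmarc_record(record: str) -> Dict[str, str]:
    """Split a DMARC TXT record into a {tag: value} dict (simplified, no validation)."""
    tags = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing semicolon
        tag, _, value = part.partition("=")
        tags[tag.strip().lower()] = value.strip()
    return tags


# Hypothetical record, as it might be published at _dmarc.example.com
record = "v=DMARC1; p=quarantine; rua=mailto:dmarc@example.com; ruf=mailto:dmarc@example.com; pct=100"
policy = parse_dmarc_record(record)

print(policy["p"])    # -> quarantine (what receivers should do with failing mail)
print(policy["rua"])  # -> mailto:dmarc@example.com (where aggregate reports go)
```

The `rua` address is where the aggregate reports that parsedmarc processes end up; `ruf` receives the forensic samples.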

Third-party tools for analysing DMARC reports are common, so if you're thinking of doing it yourself, don't. Life is too short to be wrangling DMARC reports.

But maybe you ignore the wisdom of random bloggers. People say you shouldn't lick power sockets or juggle chainsaws. After the hospital stay, and with your remaining limbs, maybe you'd like to increase your life's difficulty and deploy DMARC reporting on Kubernetes, because... YOLO? Then this is the article for you.

Parsedmarc

Parsedmarc is an open source DMARC parser written in Python. It can read a directory of zipped or unzipped reports (e.g. Google zips the reports it sends you), or it can watch an IMAP inbox and process reports as they arrive. Once the reports are parsed, it can write the results to a directory in JSON or CSV format, or upload them to an Elasticsearch instance for visualisation in Kibana or Grafana.
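Aggregate reports are XML files, usually delivered as a .zip or .gz attachment. As a rough illustration of the first job any report parser has, the sketch below unwraps either format and totals message counts per DKIM/SPF result. It is not parsedmarc's code; the tiny report is hand-written for the example and only contains the elements the sketch reads.

```python
import gzip
import io
import zipfile
import xml.etree.ElementTree as ET
from collections import Counter


def read_report_xml(payload: bytes) -> bytes:
    """Return the XML bytes from a zipped, gzipped or plain aggregate report."""
    if payload[:2] == b"\x1f\x8b":  # gzip magic number
        return gzip.decompress(payload)
    if payload[:4] == b"PK\x03\x04":  # zip local file header
        with zipfile.ZipFile(io.BytesIO(payload)) as archive:
            # Reporters normally put a single XML file in the archive
            return archive.read(archive.namelist()[0])
    return payload  # assume it is already XML


def summarise(xml_bytes: bytes) -> Counter:
    """Count messages by (dkim, spf) result taken from policy_evaluated."""
    root = ET.fromstring(xml_bytes)
    totals = Counter()
    for row in root.iter("row"):
        count = int(row.findtext("count", default="0"))
        dkim = row.findtext("policy_evaluated/dkim")
        spf = row.findtext("policy_evaluated/spf")
        totals[(dkim, spf)] += count
    return totals


# Hand-written, heavily trimmed report for illustration only
SAMPLE = b"""<feedback>
  <record><row><source_ip>192.0.2.1</source_ip><count>3</count>
    <policy_evaluated><disposition>none</disposition><dkim>pass</dkim><spf>pass</spf></policy_evaluated>
  </row></record>
  <record><row><source_ip>198.51.100.7</source_ip><count>1</count>
    <policy_evaluated><disposition>none</disposition><dkim>fail</dkim><spf>fail</spf></policy_evaluated>
  </row></record>
</feedback>"""

# Wrap the sample in a zip, the way some reporters (e.g. Google) send it
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("report.xml", SAMPLE)

print(summarise(read_report_xml(buffer.getvalue())))
```

Sniffing the magic bytes rather than trusting the attachment's file extension is a design choice of this sketch.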

Opensearch or Elasticsearch

Elastic recently changed their licensing and stopped offering an open-source-only version of Elasticsearch. They have their reasons, but I'll be using the open source Opensearch instead, a fork of Elasticsearch 7.10.2 and Kibana 7.10.2. That does mean deploying on K8s without the aid of the Elastic Operator, which is the more K8s-idiomatic way of deploying.

What are we building?

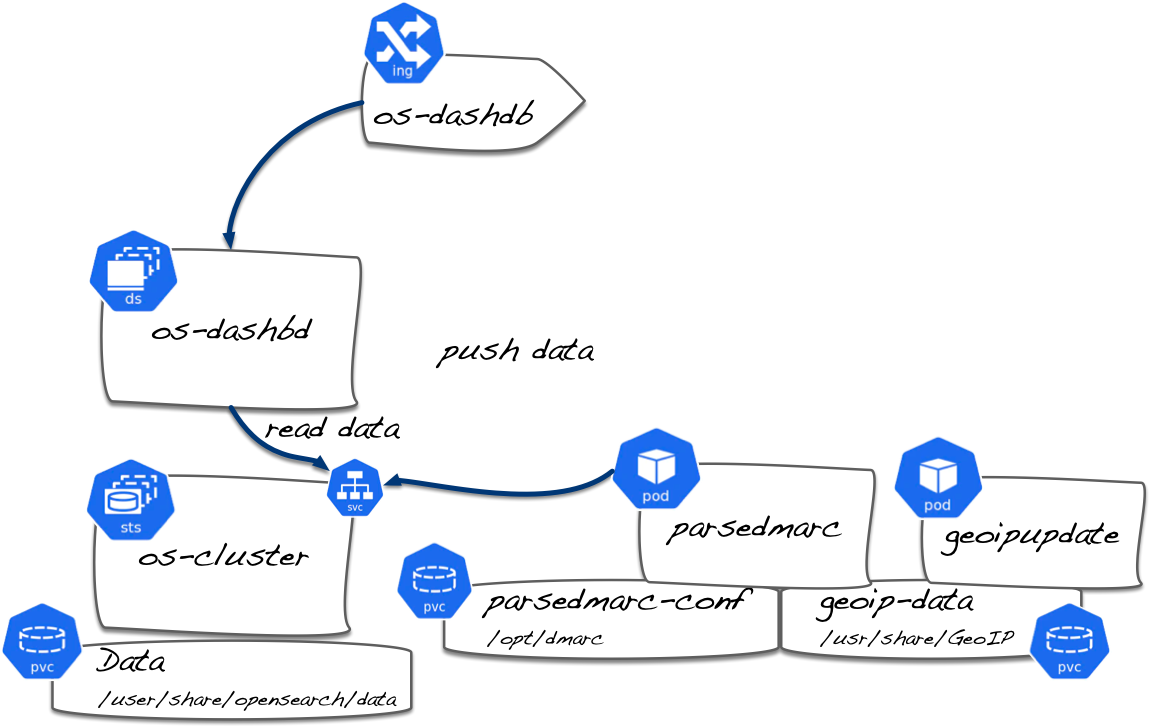

In order to process and visualise DMARC reports, we'll need the following:

- A pod with a single parsedmarc container.
- A persistentVolumeClaim for storing parsedmarc.ini and any forensic samples we want on disk.
- Maxmind's geoipupdate container. You'll need to sign up for a free account.
- A persistentVolumeClaim for sharing the GeoIP database with the parsedmarc container.
- An Opensearch StatefulSet.
- A persistentVolumeClaim for Opensearch data storage.
- An Opensearch Dashboards Deployment.

Storage: I use an NFS StorageClass as my default. You can use whatever you wish; just make sure some kind of persistent storage is available. The Opensearch StatefulSet below requests a StorageClass named my-storage, so change that to match yours.

Installing Opensearch

Since we are not using the Elastic Operator, we'll deploy the old-fashioned way. I tried converting the Opensearch docker-compose file with Kompose, but I would have spent a lot of time fixing the resulting K8s manifests. It did provide some very good hints, though.

The StatefulSet will need a headless service, so let's create that first. Note that clusterIP is set to None.

```yaml
# os-sts-svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: opensearch
  labels:
    app: opensearch
spec:
  selector:
    app: opensearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node
```

```shell
kubectl apply -f os-sts-svc.yaml
```

Now let's create the StatefulSet.

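Many of the environment variables are taken directly from the docker-compose file; cluster discovery, however, is different. Since swap typically isn't enabled on K8s nodes, I haven't disabled it explicitly in the container. We also can't set ulimits in the Opensearch container spec, so there are three initContainers: one sets ownership of the data directory, one raises the number of memory maps available to Opensearch (vm.max_map_count) and one sets the file limits. Be aware that vm.max_map_count is a node-wide kernel setting, so it outlives the init container, whereas a ulimit only applies to the shell that sets it and its children, so the increase-fd-ulimit step likely has no effect on the Opensearch process itself.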

```yaml
# os-sts.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: os-cluster
spec:
  serviceName: opensearch
  replicas: 3
  selector:
    matchLabels:
      app: opensearch
  template:
    metadata:
      labels:
        app: opensearch
    spec:
      containers:
        - name: opensearch
          image: opensearchproject/opensearch:latest
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:
            - name: data
              mountPath: /usr/share/opensearch/data
          env:
            - name: cluster.name
              value: os-lab
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.zen.ping.unicast.hosts
              value: "os-cluster-0.opensearch,os-cluster-1.opensearch,os-cluster-2.opensearch"
            - name: discovery.zen.minimum_master_nodes
              value: "2"
            - name: cluster.initial_master_nodes
              value: "os-cluster-0,os-cluster-1"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
      initContainers:
        - name: fix-permissions
          image: busybox
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/opensearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: data
              mountPath: /usr/share/opensearch/data
        - name: increase-vm-max-map
          image: busybox
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        - name: increase-fd-ulimit
          image: busybox
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:
    - metadata:
        name: data
        labels:
          app: opensearch
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: my-storage
        resources:
          requests:
            storage: 20Gi # If you're only storing parsedmarc reports, this can be much lower
```

```shell
kubectl apply -f os-sts.yaml
```
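The discovery values in the manifest follow from how StatefulSets work: pods are named `<statefulset>-0`, `<statefulset>-1` and so on, and the headless Service gives each pod a stable DNS name of the form `<pod>.<service>` within the namespace. The minimum master node count of 2 is the usual majority quorum for three master-eligible nodes. The sketch below derives both values; `seed_hosts` and `majority` are hypothetical helpers for illustration only.

```python
def seed_hosts(statefulset: str, service: str, replicas: int) -> str:
    """Build the comma-separated list of per-pod DNS names.

    Each pod is named <statefulset>-<ordinal> and, via the headless Service,
    resolves as <pod>.<service> from within the same namespace.
    """
    return ",".join(f"{statefulset}-{i}.{service}" for i in range(replicas))


def majority(master_eligible: int) -> int:
    """Smallest number of nodes forming a strict majority (guards against split brain)."""
    return master_eligible // 2 + 1


print(seed_hosts("os-cluster", "opensearch", 3))
# -> os-cluster-0.opensearch,os-cluster-1.opensearch,os-cluster-2.opensearch
print(majority(3))  # -> 2
```

If you scale the StatefulSet, both values would need updating together.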

Elasticsearch clients and UnsupportedProductError

Recently, Elastic changed the logic in their clients to detect the version and flavour of the server they are connecting to, and to break when connecting to any open source edition, including older Elasticsearch servers. Parsedmarc uses the newer Elasticsearch Python client and will break if we do nothing. The first thing we need to do is change the version Opensearch reports; we'll also pin the client library version later.

From any container that can reach the Opensearch StatefulSet (mytools-cntnr below is just whatever tools pod you have handy), enable the compatibility setting through the cluster settings API so that Opensearch reports itself as version 7.10.2:

```shell
kubectl exec mytools-cntnr -- curl -k -X PUT -H "Content-Type: application/json" \
  -d '{"persistent": {"compatibility.override_main_response_version": "true"}}' \
  "https://opensearch:9200/_cluster/settings" -u admin:admin
```

Yes, the default credentials are insecure, so change them now. We won't be using the admin user for any non-interactive tasks, so changing its password won't break anything.

Installing Opensearch-Dashboard

The next step is deploying a LoadBalancer Service for Opensearch Dashboards. This may differ depending on your K8s configuration (a NodePort Service or an Ingress are common alternatives).

```yaml
# os-dash-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: os-dashbd
  name: os-dashbd
spec:
  ports:
    - name: 5601-tcp
      port: 5601
      targetPort: 5601
  selector:
    app: os-dashbd
  type: LoadBalancer
```

```shell
kubectl apply -f os-dash-svc.yaml
```

Next, we'll deploy the dashboard container, using the headless opensearch Service as the OPENSEARCH_HOSTS value.

```yaml
# os-dash-dep.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: os-dashbd
  name: os-dashbd
spec:
  replicas: 1
  selector:
    matchLabels:
      app: os-dashbd
  template:
    metadata:
      labels:
        app: os-dashbd
    spec:
      containers:
        - env:
            - name: OPENSEARCH_HOSTS
              value: https://opensearch:9200
          image: opensearchproject/opensearch-dashboards:latest
          name: os-dashbd
          ports:
            - containerPort: 5601
      restartPolicy: Always
```

```shell
kubectl apply -f os-dash-dep.yaml
```

Create Users for Opensearch

With the dashboard ready, take a moment to create the user parsedmarc will use to access the API. Call the user parsedmarc, and map it to a role that can create and write to the indices parsedmarc uses.
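If you'd rather script this than click through the dashboard, the Opensearch Security plugin exposes a REST API for internal users. The sketch below only builds (it does not send) such a request with Python's standard library. It assumes the `_plugins/_security/api/internalusers/<username>` endpoint of current Opensearch releases; `build_create_user_request` is a hypothetical helper and both passwords are placeholders. The role mapping is left to you.

```python
import base64
import json
import urllib.request


def build_create_user_request(base_url: str, admin_user: str, admin_pass: str,
                              username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a PUT that creates or replaces an internal user.

    Assumes the Opensearch Security plugin's internalusers REST endpoint.
    """
    body = json.dumps({"password": password}).encode("utf-8")
    token = base64.b64encode(f"{admin_user}:{admin_pass}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{base_url}/_plugins/_security/api/internalusers/{username}",
        data=body,
        method="PUT",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


req = build_create_user_request("https://opensearch:9200", "admin", "NEW_ADMIN_PASSWORD",
                                "parsedmarc", "PASSWORD")
print(req.get_method(), req.full_url)
```

Sending it with `urllib.request.urlopen(req)` would also need an SSL context that trusts (or, in a lab, skips verifying) the cluster's self-signed certificate.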

Install GeoIPUpdate

This is a good time to register for your free GeoLite2 account and create a license key. Record your account ID and license key somewhere; you'll need them later.

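We'll create the PersistentVolumeClaim to store the GeoIP database first. Both the geoipupdate pod and the parsedmarc pod mount this claim, and ReadWriteOnce only allows a volume to be mounted by a single node, so either keep both pods on the same node or use ReadWriteMany if your StorageClass supports it.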

```yaml
# geoip-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: geoip-data
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi
```

```shell
kubectl apply -f geoip-pvc.yaml
```

Now we deploy Maxmind's geoipupdate container. Replace the relevant env values below with your account ID and license key.

```yaml
# geoip-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: geoipupdate
  labels:
    app: geoipupdate
spec:
  containers:
    - name: geoipupdate
      image: maxmindinc/geoipupdate:latest
      env:
        - name: GEOIPUPDATE_ACCOUNT_ID
          value: 'MyAccountID' # This is a number
        - name: GEOIPUPDATE_LICENSE_KEY
          value: myLicenseKey # This is a string
        - name: GEOIPUPDATE_EDITION_IDS
          value: GeoLite2-ASN GeoLite2-City GeoLite2-Country
        - name: GEOIPUPDATE_FREQUENCY
          value: '72' # Hours between update runs
      volumeMounts:
        - mountPath: /usr/share/GeoIP
          name: geoip-data
  # The volumeMount above needs a matching volume backed by the PVC
  volumes:
    - name: geoip-data
      persistentVolumeClaim:
        claimName: geoip-data
```

```shell
kubectl apply -f geoip-pod.yaml
```
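geoipupdate writes one .mmdb file per edition ID into /usr/share/GeoIP, and parsedmarc mounts the same claim at the same path. A quick way to confirm the shared volume works is to look for those files from the parsedmarc side. The helper below is a hypothetical sketch; the file names assume geoipupdate's usual `<EditionID>.mmdb` naming.

```python
from pathlib import Path
from typing import Iterable, List

# Edition IDs from GEOIPUPDATE_EDITION_IDS in geoip-pod.yaml
EDITIONS = ("GeoLite2-ASN", "GeoLite2-City", "GeoLite2-Country")


def missing_databases(directory: str, editions: Iterable[str] = EDITIONS) -> List[str]:
    """Return the edition IDs that have no <edition>.mmdb file in directory."""
    base = Path(directory)
    return [edition for edition in editions if not (base / f"{edition}.mmdb").is_file()]


if __name__ == "__main__":
    # Intended to run inside the parsedmarc pod, where geoip-data is mounted
    missing = missing_databases("/usr/share/GeoIP")
    print("all GeoIP databases present" if not missing else f"missing: {', '.join(missing)}")
```

Since the parsedmarc image is based on python:3, a script like this could be run there with `kubectl exec` once the pod exists.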

Installing parsedmarc

We'll need to build a container image to run parsedmarc in. We'll pin the Elasticsearch Python module to a version that doesn't have the 'oss' flavour check, so that it works against Opensearch. First, create the parsedmarc.ini file:

```ini
# parsedmarc.ini
[general]
save_aggregate = True
save_forensic = True
nameservers = 1.1.1.1 8.8.8.8 9.9.9.9
[elasticsearch]
hosts = https://parsedmarc:PASSWORD@opensearch:9200
[imap]
host = imap.example.com
port = 993
watch = True
delete = False
```

Replace PASSWORD with the password you gave the parsedmarc Opensearch user. Next, the Python requirements.txt file:

```text
# requirements.txt
## Needed for compatibility with OSS versions of elasticsearch
elasticsearch==7.13.4
parsedmarc
```
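The pin works because the product check arrived in elasticsearch-py 7.14.0, so a 7.13.x client doesn't perform it. If you want the image to fail fast when a dependency pulls in a newer client, a check along these lines could run at startup. `predates_product_check` and `installed_client_ok` are hypothetical helpers, not something parsedmarc provides.

```python
from importlib.metadata import PackageNotFoundError, version


def predates_product_check(client_version: str) -> bool:
    """True if an elasticsearch-py version string is older than 7.14.0."""
    major, minor = (int(part) for part in client_version.split(".")[:2])
    return (major, minor) < (7, 14)


def installed_client_ok() -> bool:
    """Check the installed elasticsearch package, treating 'not installed' as a failure."""
    try:
        return predates_product_check(version("elasticsearch"))
    except PackageNotFoundError:
        return False


print(predates_product_check("7.13.4"))  # -> True
print(predates_product_check("7.14.0"))  # -> False
```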

Finally, use this Dockerfile. It's based on the official Python 3 image from Docker Hub.

```dockerfile
# Dockerfile
FROM python:3

COPY requirements.txt ./
RUN pip install --no-cache-dir -r requirements.txt

COPY parsedmarc.ini /
```

Build the image and push it to your favourite container registry, as long as your cluster can reach it (replace MYDOCKERHUB with your own account):

```shell
buildah bud -t parsedmarc
podman image tag localhost/parsedmarc docker.io/MYDOCKERHUB/parsedmarc:latest
podman push docker.io/MYDOCKERHUB/parsedmarc:latest
```

Next, we'll create the PVC to store the configuration and forensic samples.

```yaml
# parsedmarc-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: parsedmarc-conf
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi
```

```shell
kubectl apply -f parsedmarc-pvc.yaml
```

We didn't hardcode the IMAP credentials in plain text, because that would be very dumb. Unfortunately, parsedmarc has no secure way of storing the credentials you supply, and with this setup they end up in plain text in the config file on the volume. So in a production environment, be sure to restrict access to the parsedmarc pod and the parsedmarc-conf PVC.

We're going to add a Secret, which the parsedmarc container will ingest as environment variables. Note that the values in a Secret's data field are only base64-encoded, not encrypted.

```shell
$ echo -n 'dmarc@example.com' | base64
ZG1hcmNAZXhhbXBsZS5jb20=
$ echo -n 'myp@$$w0rd' | base64
bXlwQCQkdzByZA==
```

```yaml
# dmarc-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: dmarc-secret
data:
  username: ZG1hcmNAZXhhbXBsZS5jb20=
  password: bXlwQCQkdzByZA==
```

```shell
kubectl apply -f dmarc-secret.yaml
```
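If you'd rather not shell out to base64, the same values can be produced in Python. The sketch below builds the data section of dmarc-secret from plain-text values; `secret_data` is a hypothetical helper. Kubernetes also accepts plain text under a Secret's stringData field and encodes it for you.

```python
import base64
from typing import Dict


def secret_data(values: Dict[str, str]) -> Dict[str, str]:
    """Base64-encode plain-text values for a Kubernetes Secret's data field."""
    return {key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()}


data = secret_data({"username": "dmarc@example.com", "password": "myp@$$w0rd"})
print(data["username"])  # -> ZG1hcmNAZXhhbXBsZS5jb20=
print(data["password"])  # -> bXlwQCQkdzByZA==
```

Unlike `echo` in an interactive shell, there is no quoting to get wrong here: a `$` in a Python string is just a character.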

Finally, let's create the pod using the image from our registry. The dmarc-secret values become environment variables, which the startup command appends to a copy of parsedmarc.ini to complete our IMAP configuration.

```yaml
# parsedmarc-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: parsedmarc
  labels:
    app: parsedmarc
spec:
  containers:
    - name: parsedmarc
      image: docker.io/MYDOCKERHUB/parsedmarc:latest
      env:
        - name: IMAP_USER
          valueFrom:
            secretKeyRef:
              name: dmarc-secret
              key: username
        - name: IMAP_PASS
          valueFrom:
            secretKeyRef:
              name: dmarc-secret
              key: password
      command: ["/bin/sh", "-c"]
      args: ['cp /parsedmarc.ini /opt/dmarc/parsedmarc.ini; echo "user = ${IMAP_USER}" >> /opt/dmarc/parsedmarc.ini; echo "password = ${IMAP_PASS}" >> /opt/dmarc/parsedmarc.ini; parsedmarc -c /opt/dmarc/parsedmarc.ini']
      volumeMounts:
        - mountPath: /opt/dmarc
          name: parsedmarc-conf
        - mountPath: /usr/share/GeoIP
          name: geoip-data
  volumes:
    - name: parsedmarc-conf
      persistentVolumeClaim:
        claimName: parsedmarc-conf
    - name: geoip-data
      persistentVolumeClaim:
        claimName: geoip-data
```

```shell
kubectl apply -f parsedmarc-pod.yaml
```
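The startup command works because [imap] is the last section in parsedmarc.ini: INI parsers assign each key to the most recent section header, so the user and password lines appended by `echo` land under [imap]. The sketch below reproduces that with Python's configparser, using the example credentials from the Secret. Whether parsedmarc reads its file exactly this way is an assumption; `with_credentials` is a hypothetical helper mimicking the shell command.

```python
import configparser

# The parsedmarc.ini baked into the image (PASSWORD is still a placeholder)
BASE_INI = """[general]
save_aggregate = True
save_forensic = True
nameservers = 1.1.1.1 8.8.8.8 9.9.9.9
[elasticsearch]
hosts = https://parsedmarc:PASSWORD@opensearch:9200
[imap]
host = imap.example.com
port = 993
watch = True
delete = False
"""


def with_credentials(base: str, user: str, password: str) -> str:
    """Mimic the pod's two `echo ... >>` commands: append lines to the end of the file."""
    return base + f"user = {user}\n" + f"password = {password}\n"


# interpolation=None so a '%' in a password is not treated as a substitution
config = configparser.ConfigParser(interpolation=None)
config.read_string(with_credentials(BASE_INI, "dmarc@example.com", "myp@$$w0rd"))

print(config["imap"]["user"])                # -> dmarc@example.com
print(config.has_option("general", "user"))  # -> False
```

This also shows why parsedmarc.ini must end with a newline: otherwise the first appended line would be glued onto `delete = False`. Copying the pristine file from the image on every start keeps the appended lines from piling up across restarts.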

Viewing the data

Now that parsedmarc is sending summary information to Opensearch, let's take a look.

Download the Kibana dashboard from the parsedmarc project.

Log in to the Opensearch dashboard and select the 'Add your data' option. From there select 'Saved Objects' and 'Import' the file you just downloaded.

You'll get the most value out of the DMARC Summary report when first viewing the data.

Get the code

All files shown in this post can be found here